IMO Prize Lecture 2024: Ensemble Weather and Climate Prediction – From Origins to AI

- Author: Tim Palmer, Department of Physics, University of Oxford

Ensemble prediction is a vital part of modern operational weather and climate prediction, allowing users to estimate quantitatively the degree of confidence they can have in a particular forecast outcome. This, of course, is essential in helping such users make decisions about different weather-dependent scenarios. The development of such ensemble systems for both weather and climate prediction has played a large part in my own research career. Below is a personal perspective on these matters. I conclude with some thoughts about directions we should be taking going forward, especially in the light of the AI revolution. I am grateful and humbled to have been awarded the WMO IMO medal for this work – many of my greatest heroes are IMO medallists.

Operational numerical weather prediction (NWP) was born shortly before 1950, when John von Neumann assembled a team of meteorologists, led by Jule Charney (1971 IMO medallist), to encode the barotropic vorticity equation on the ENIAC digital computer. These early models were not global and could only make useful forecasts for a day or two ahead.

Soon meteorologists began asking how far ahead reliable weather forecasts could in principle be made, given global multilayer models which encoded the primitive equations. Studies by Chuck Leith, Yale Mintz and Joe Smagorinsky (1974 IMO medallist) provided estimates of around two weeks, based on the time it took initial perturbations with amplitudes consistent with initial condition error to become as large as randomly chosen states of the atmosphere. The two-week timescale became known as the “limit of deterministic predictability”. As I will discuss below, it became a misunderstood concept.

I joined the Met Office (UK) in 1977 after a PhD working on Einstein’s general theory of relativity – a childhood ambition of mine. My change in subject was in part driven by a desire to do something more useful for the rest of my research career, and in part due to a chance meeting with Raymond Hide, an inspirational and internationally renowned geophysicist then working at the Met Office. My early work at the Met Office was on stratospheric dynamics. With my colleague Michael McIntyre from the University of Cambridge, I discovered the world’s largest breaking waves, and in so doing kick-started the use of Rossby-Ertel potential vorticity as an insightful diagnostic of planetary-scale atmospheric circulation. The general absence of such breaking Rossby waves in the Southern Hemisphere is critically important in explaining why the ozone hole was first discovered in the Southern Hemisphere and not in the Northern Hemisphere, as had been expected.

In this way, I had become an expert in stratospheric dynamics and was promoted to become a Principal Scientific Officer and hence group leader at the Met Office. By a quirk of the UK scientific civil service, this meant I had to move field since there were no vacancies for group leader in the stratospheric group. Indeed, the only research-leader vacancy was in the long-range forecasting branch. And so there I was posted, not knowing anything about long-range forecasting.

In those days, long-range forecasting – on month-to-seasonal timescales – was performed with what were called statistical empirical models. Now, we would call them data-driven models. My job was to introduce physics-based models into the operational long-range forecast system. Early work by J. Shukla (2007 IMO medallist) and Kiku Miyakoda indicated that such models – if they are driven with observed sea-surface temperatures – are in principle able to forecast the planetary-scale flow on these long timescales.

The statistical empirical model output was probabilistic in nature. They would, for example, provide probabilistic forecasts of the occurrence of predefined circulation regimes over the UK (known as Lamb weather types). Hence if physics-based models were to be integrated into the long-range forecast system, the forecast output also had to be probabilistic in nature. My colleague James Murphy and I developed a relatively crude ensemble forecast system based on the Met Office global climate model, using consecutive analyses to form the initial conditions, from which such probabilities could be estimated. This, I believe, was the world’s first real-time ensemble forecast system. It began producing probabilistic ensemble forecasts in late 1985.

Early in 1986 I had what might be called a lightbulb moment: Why aren’t we using the ensemble forecast system on all timescales, including the medium-range and (even) the short range? I joined the European Centre for Medium-Range Weather Forecasts (ECMWF) in 1986 to try to develop and implement such a system, but I met with some pushback from colleagues. Their argument was that whilst it was fine to use probabilistic ensemble methods to forecast on timescales longer than the limit of deterministic predictability, that is to say on month-to-seasonal timescales, the forecast problem on timescales within this limit was essentially deterministic in character. We should therefore use new computer resources, when they became available (this was the era of Moore’s Law), to improve the deterministic forecast system – notably by increasing model resolution – rather than make multiple runs of an existing model.

I felt that this argument was based on a misunderstanding of this notion of “limit of deterministic predictability”. My view was that it should be thought of as characterizing the predictability of weather on average, meaning over many instances. There will be instances when such predictability is shorter than the 2-week average, and instances where the predictability of weather is longer than the 2-week average. The key purpose of an ensemble forecast system is to help determine, ahead of time, whether the weather is in a more or less predictable state. I argued that if media forecasters make forecasts with more confidence than the predictability of the flow deserves, the public will lose confidence in meteorologists when things go wrong. (In truth, there were so many instances where the deterministic TV forecasts had gone wrong that the public treated weather forecasting as a rather dark art, to be taken with a large grain of salt.)

Ed Lorenz (2000 IMO medallist) developed his iconic model of chaos to show that the atmosphere did not evolve periodically. However, as shown in Figure 1, his model also illustrates well the concept that the growth of small initial uncertainties, and hence predictability, is state dependent, even in the early stages of a forecast. There can be occasions when small uncertainties grow explosively. Lorenz’s model makes it clear that the notion of a limit of predictability is indeed a statistical one, obtained by averaging over many initial conditions.
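As a minimal sketch of this state dependence (not the calculation behind Figure 1), the code below integrates small ensembles of the Lorenz 1963 equations from different starting points on the attractor and compares how much the ensemble spread grows over the same lead time. The parameter values are Lorenz's standard ones; the ensemble size, perturbation amplitude, lead time and the function names are assumptions chosen purely for illustration.

```python
import random


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz (1963) equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def integrate(state, n_steps, dt=0.01):
    for _ in range(n_steps):
        state = rk4_step(state, dt)
    return state


def ensemble_spread(members):
    """Root-mean-square distance of the members from the ensemble mean."""
    n = len(members)
    mean = [sum(m[i] for m in members) / n for i in range(3)]
    variance = sum(
        sum((m[i] - mean[i]) ** 2 for i in range(3)) for m in members
    ) / n
    return variance ** 0.5


def spread_growth(start, n_members=20, amplitude=1e-3, n_steps=100, seed=0):
    """Ratio of final to initial spread for an ensemble centred on `start`.

    Ensemble size, amplitude and lead time are illustrative assumptions.
    """
    rng = random.Random(seed)
    members = [
        tuple(s + rng.gauss(0.0, amplitude) for s in start)
        for _ in range(n_members)
    ]
    initial = ensemble_spread(members)
    members = [integrate(m, n_steps) for m in members]
    return ensemble_spread(members) / initial


# Spin up onto the attractor, then sample 30 different starting states.
state = integrate((1.0, 1.0, 1.0), 2000)
growth = []
for _ in range(30):
    state = integrate(state, 50)
    growth.append(spread_growth(state))

print(f"smallest spread growth: {min(growth):.2f}")
print(f"largest spread growth:  {max(growth):.2f}")
```

The same initial uncertainty, followed for the same lead time, grows by very different factors depending on where on the attractor the forecast starts. A single averaged growth rate hides exactly this variation.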

Whilst some of my colleagues may have thought Lorenz’s model was too idealized to be useful, nature spoke decisively in the second half of 1987. The famous October 1987 storm, which ravaged large parts of southern England, had not been forecast the day before. The media called for the resignation of then Met Office Director General, John Houghton. The UK public had indeed lost confidence in the nation’s weather forecast service.

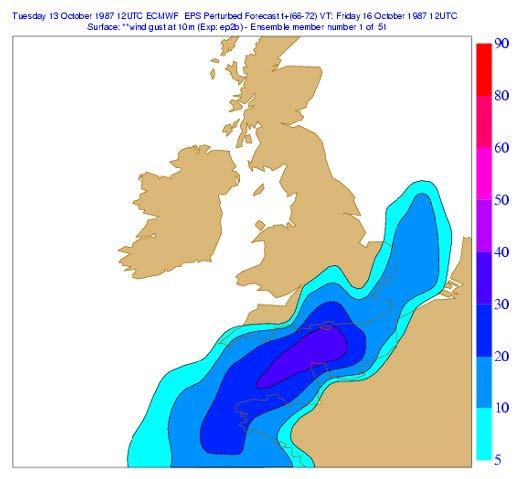

My ECMWF colleagues and I retrospectively ran our nascent medium-range ensemble forecast system on this case, and to our relief it showed an extraordinarily unstable and unpredictable flow over the North Atlantic. An ensemble of 50 forecasts at 2.5 days lead time showed ensemble members whose weather over southern England ranged from a ridge of settled weather to hurricane force wind gusts. The individual ensemble members are shown in Figure 2.

But how to communicate such an ensemble to the public? Some colleagues felt – and some still do – that one should average over the individual ensemble solutions and communicate the ensemble-mean. Whilst the root-mean-square error of such an ensemble-mean forecast would be relatively small, an ensemble average would necessarily damp out the extreme weather solutions. Unless the extreme weather is exceptionally predictable, which it rarely is, an ensemble-mean forecast is useless in warning of extreme weather.

I argued that the forecast probability of (say) hurricane force wind gusts should be communicated directly to the public. At the time, many forecasters argued that the public would never understand the notion of probability. My own feeling was that the public understood well the odds on a horse winning a horse race. Why should weather be any different? The probability of hurricane force winds from the ensemble in Figure 2 is shown in Figure 3. Given that “in Hertford, Hereford and Hampshire, hurricanes hardly ever happen”, a probability of around 30% is very significant indeed and would have alerted the public to the risk of an exceptional weather event.
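The contrast between an ensemble-mean forecast and a probability forecast can be sketched in a few lines. The gust values below are invented for illustration and are not the 1987 ensemble; the threshold of 32.7 m/s is the lower bound of Beaufort force 12, and the function names are hypothetical.

```python
def ensemble_mean(values):
    """Average over ensemble members."""
    return sum(values) / len(values)


def exceedance_probability(values, threshold):
    """Fraction of ensemble members at or above the threshold."""
    return sum(v >= threshold for v in values) / len(values)


# Hypothetical peak gusts (m/s) at one location from a 10-member ensemble.
# These numbers are made up for illustration only.
gusts = [12, 14, 15, 11, 35, 13, 36, 16, 34, 12]

HURRICANE_FORCE = 32.7  # m/s, lower bound of Beaufort force 12

mean_gust = ensemble_mean(gusts)
prob = exceedance_probability(gusts, HURRICANE_FORCE)

print(f"ensemble-mean gust: {mean_gust:.1f} m/s")
print(f"probability of hurricane-force gusts: {prob:.0%}")
```

In this toy case the ensemble mean lies well below hurricane force, so a forecast based on it would give no warning, whereas the exceedance probability retains the information carried by the three extreme members.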

The success of the ensemble forecast system in retrospectively forecasting the risk of extreme weather in October 1987 was important in convincing colleagues that a centre like ECMWF should take probabilistic ensemble prediction seriously – even though its main task was to make forecasts within the limit of deterministic predictability. The ECMWF Ensemble Prediction System was implemented operationally in 1992: it was the work of many individuals whom I acknowledge in my book The Primacy of Doubt. Colleagues from the National Centers for Environmental Prediction (NCEP) implemented their medium-range ensemble forecast system at the same time. (The NCEP team was then led by a sadly departed colleague Eugenia Kalnay, 2009 IMO medallist, with whom I had many friendly scientific discussions on the most appropriate method to generate initial ensemble perturbations.)

Today, ensemble prediction has become an integral part of weather and climate forecasting on all timescales, from the very short range to climate change timescales and beyond. To some extent, seeing the way in which ensemble forecasting has spread around the world reminds me of Max Planck’s adage: you rarely convince your opponents, rather a new generation comes along for whom the idea is obvious.

As part of this transition to ensembles, forecasts of rainfall on weather apps are given as probabilities. Whilst the public may not know that these probabilities are formed from ensemble forecasts, they do understand that they provide estimates of forecast uncertainty. Obviously, a forecast of 100% chance of rain is more certain than a forecast of 20% chance of rain. The public understand these forecasts, which help them in making decisions. This could be for something as trivial as whether to pack waterproofs for a hike in the hills. If the probability of rain is 20%, then it is 80% likely you won’t need the waterproofs – which you would otherwise have to pack in your backpack, making it a little heavier than it would otherwise be. But if it did rain and you hadn’t brought your waterproofs, the rest of the walk would be very uncomfortable. Of course it is up to the user, and not the forecaster, to decide whether to pack the waterproofs. In this case the user must weigh up the risk of getting soaked (probability of rain times inconvenience of wet clothes) against the inconvenience of a heavier backpack. If the likelihood of rain was 5% then perhaps it wouldn’t be worth packing waterproofs. If it was 80% then perhaps it would be worth it. Where exactly the dividing line is between packing and not packing is a matter of choice. Forecasts with estimates of uncertainty help the user make better decisions.

One of the most important applications of medium-range ensemble forecasting is for disaster relief. Until recently, humanitarian agencies sent in food, water, medicine, shelter and other types of aid, after an extreme weather event had hit a region. The deterministic forecasts were simply too unreliable for these agencies to take anticipatory action. However, because of ensemble forecasts, matters have changed. Like the dividing line between packing waterproofs or not, ahead of time an agency can estimate objectively a probability threshold for an extreme event, above which anticipatory action can be taken, and below which it should not be taken. If the forecast probability for a specific event exceeds this threshold, then it makes objective sense for anticipatory action to be taken.
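One simple way to make such a threshold concrete, for the waterproofs and for anticipatory action alike, is the textbook cost-loss model. It is an assumption here, not necessarily the method any agency uses. If protective action costs C and the unprotected loss is L, acting has a certain expense C, whereas not acting has an expected expense of p times L, so acting pays off when the forecast probability p exceeds C/L. The function names and numbers below are hypothetical.

```python
def action_threshold(cost, loss):
    """Probability above which acting is cheaper on average (cost-loss model).

    Assumes acting costs `cost` and fully avoids a loss of `loss`.
    """
    if not 0 < cost < loss:
        raise ValueError("expected 0 < cost < loss")
    return cost / loss


def should_act(probability, cost, loss):
    """True when the forecast probability exceeds the cost-loss threshold."""
    return probability > action_threshold(cost, loss)


# Hypothetical units: acting costs 1, the unprotected loss is 10.
cost, loss = 1.0, 10.0
print(f"threshold: {action_threshold(cost, loss):.0%}")
for p in (0.05, 0.20, 0.80):
    print(f"forecast probability {p:.0%}: act = {should_act(p, cost, loss)}")
```

The threshold depends only on the user's own costs and losses, which is why it can be fixed ahead of time and why it differs from user to user. A deterministic forecast offers no probability to compare against it.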

We are in the midst of a revolution

Towards the end of 2023, a revolution happened. A group at the company Huawei showed that it was possible to forecast the weather using artificial intelligence (AI). Not only that, ECMWF’s deterministic headline scores were matched by the AI system. In some sense, this was a return to the days of statistical empirical models – given lots of data, develop code which would uncover correlations in the data which can be used to make predictions. No knowledge of the laws of physics needed!

Since then, AI has been used to create ensemble forecast systems, again with levels of skill comparable to those of the ECMWF ensemble (though I haven’t yet seen whether they could have forecast the probability of hurricane force winds in 1987 as shown in Figure 2). In the words of the Met Office’s Chief AI Officer: “We are in the midst of a revolution in AI where the world’s fastest growing deep technologies have the potential to rewrite the rules of entire industries, changing the way we live.”

Indeed, will we need numerical weather prediction at all in the future? Will weather prediction become dominated by the commercial sector with their cheap-to-run AI models? Time will tell. However, I believe that in the future, weather forecasting will be a mixture of data-driven AI and physics-based numerical weather prediction. In a sense, we will return to the days when I began developing ensembles for the month-to-seasonal timescale, and the operational forecasts blended the statistical empirical models and the physics-based models.

In Oxford, we have been working on such a mixture. Currently, national weather services run limited-area models to downscale global forecasts to their region. With global ensemble forecasts, this becomes computationally expensive. Indeed, no national weather service can afford to create 50 regional downscaled forecasts over the 2-week range of the global forecast system. As a result, valuable information is lost when the downscaling is performed. As an alternative, we have developed a Generative Adversarial Network to perform the downscaling coupled to the ECMWF global ensemble. With this piece of AI, a probabilistic downscaled regional forecast over the whole 2-week forecast period becomes possible at very modest computational cost. We have tested the combined NWP-AI system over the UK using radar data for proxies of precipitation. It appears to work well and the downscaled forecast is more skillful than the raw global ensemble. We are now testing the NWP-AI system in East Africa. If it works there, then it should work anywhere in the world. This method has the potential to change the way in which operational weather forecasts are made, worldwide.

Of course, there is a theoretical reason why it is best to try to combine physics-based and AI-based models. With climate change, individual weather events are now occurring with such intensity that they lie outside an AI’s training data. It is almost a truism that extrapolation is more unreliable than interpolation in statistics. To maintain trustworthiness in our forecasting systems, it is vital to continue to include the physics-based models.

Indeed, when it comes to the climate timescale, I would argue that there is no alternative but to continue to use physics-based models for regional or global projections of climate change.

Whether it is estimating the likelihood of passing a tipping point, or of some acceleration in climate change due to positive cloud feedback, we cannot rely on AI estimates based on training data which have not seen tipping points or changing global cloud feedback.

I would argue that the climate change problem is itself reason enough to keep physics-based models for weather forecasting too. I am a great believer in what is called seamless prediction. By testing whether an NWP model can accurately forecast tomorrow’s maximum temperature, we are implicitly testing the cloud schemes and land-surface scheme in this model. A systematic bias in a short-range forecast of maximum temperature is indicative of something wrong in the model’s parametrized physics – such as in the cloud schemes or land-surface scheme. And, of course, if there are biases in such schemes, we cannot trust that model’s prediction of climate change on the century timescale.

So, how should NWP systems develop to take maximum advantage of this NWP-AI synergy? Personally, I think the most important development now needed in NWP is the development of a km-scale global model. As well as being critically important for NWP, it will be important for regional climate prediction too.

A key point about km-scale models is that three important physical processes, deep convection, orographic gravity-wave drag and ocean mixing, will be at least partially resolved. Hopefully this will allow some of the systematic errors that arise when these processes are parametrized to be reduced. Of course, there will continue to be uncertainties in the computational representation of the equations of motion. But these uncertainties can be represented using stochastic techniques.

No doubt km-scale modelling will happen if we wait long enough. However, if we extrapolate the resolution of Coupled Model Intercomparison Project (CMIP) models, we will not reach km-scale until at least the mid-2050s (Andreas Prein, personal communication). This is not good enough in my opinion. If we are going to reach irreversible tipping points or changing cloud feedback in our climate system, we need to know about them now, not when it’s too late.

My own view is that we need some kind of “CERN [Conseil Européen pour la Recherche Nucléaire] for Climate Change” where modelling centres around the world can pool human, computer and financial resources to develop a new generation of seamless km-scale global weather/climate model. To those who argue that we need multiple models in order to represent model diversity and hence uncertainty, I would say that we can represent such diversity better with stochastic representations of unresolved processes. Why better? Because each member of a multimodel ensemble erroneously assumes that the parametrization process is deterministic, which it is not. This is a class error that can be alleviated in a stochastic model.

Some say that if we put computing resources into a km-scale global model, we will not have computing resources to run large ensembles. However, my view is that this is an area where a hybrid AI, physics-based model system could be transformative. The idea would be for the AI system, trained on output from the km-scale model, to generate synthetic high-resolution ensemble members. In this way, based on a skeleton ensemble of maybe 20 members, we could generate ensemble sizes of thousands using AI.

Of course, we will continue to need a hierarchy of climate models. These km-scale models will lie at one end of the hierarchy, which will include both CMIP models and highly idealized models like Lorenz’s model of chaos.

I would like to conclude by thanking WMO, not only for this award, but for helping my career develop. The workshops and conferences organized under WMO auspices helped me to meet scientists from different parts of the world, and, importantly, from different areas of weather and climate science, that I would otherwise not have met. These workshops were critical in providing the seeds of ideas that I have discussed in this essay.

Acknowledgement

The work I have described above could not have happened without the help, inspiration and guidance of many colleagues, a number of whom are listed in my book The Primacy of Doubt.

Further Reading

Palmer, T.N., 2019: The ECMWF ensemble prediction system: Looking back (more than) 25 years and projecting forward 25 years. Quart. J. Roy. Meteorol. Soc., 145, Issue SI, 12-14. https://doi.org/10.1002/qj.3383.

Palmer, T.N., 2022: The Primacy of Doubt. Basic Books and Oxford University Press.